Retail technology platform Relex raises $200M from TCV

Amazon’s formidable presence in the world of retail stems partly from the fact that it’s not just a commerce giant but also a tech company. It builds solutions and platforms in-house that make its processes, from figuring out what to sell to how much to have on hand and how best to distribute it, more efficient and smarter than those of its competitors. Now, one of the startups building retail technology to help retailers that are not Amazon compete better with it has raised a significant round of funding to meet that challenge.

Relex, a company out of Finland that focuses on retail planning, uses AI and machine learning to help both brick-and-mortar and e-commerce companies make better forecasts of how products will sell, and in turn gives those retailers guidance on what to stock and how much of it. Today it is announcing that it has raised $200 million from TCV. The VC giant, which has backed iconic companies like Facebook, Airbnb, Netflix, Spotify and Splunk, announced a new $3 billion fund last week, and this is the first investment out of it to be made public.

Relex is not disclosing its valuation, but from what I understand TCV has taken a minority stake, which would put the company’s valuation at between $400 million and $500 million. The company has been around for a few years but has been very capital-efficient, previously raising only between $20 million and $30 million, from Summit Partners, with much of that sum still in the bank.

That lack of song and dance around VC funding also helped keep the company relatively under the radar, even while it has quietly grown to work with customers like supermarkets Albertsons in the U.S., Morrisons in the U.K. and a host of others. Business today is mostly in North America and Europe, with the U.S. growing the fastest, CEO Mikko Kärkkäinen — who co-founded the company with Johanna Småros and Michael Falck — said in an interview.

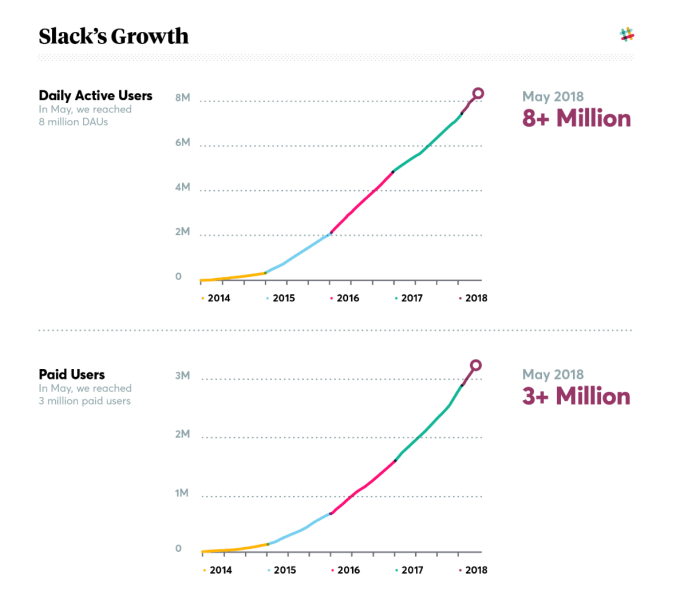

While the company has already been growing at a steady clip — Kärkkäinen said sales have been expanding by 50 percent each year for a while now — the plan now will be to accelerate that.

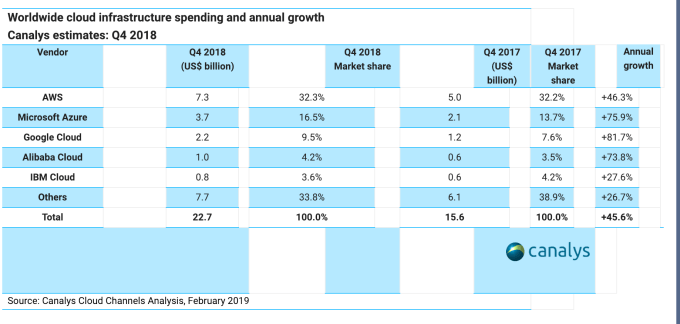

Relex competes with management systems from SAP, JDA and Oracle, but Kärkkäinen said that these are largely “legacy” solutions, in that they do not take advantage of advances in areas like machine learning and cloud computing — both of which form the core of what Relex uses — to crunch more data more intelligently.

“Most retailers are not tech companies, and Relex is a clear leader among a lot of legacy players,” said TCV general partner John Doran, who led the deal.

Significantly, using machine learning and cloud computing to crunch retail data is an approach that the elephant in the room, Amazon, pioneered and has used to great effect, becoming one of the biggest companies in the world.

“Amazon has driven quite a lot of change in the industry,” Kärkkäinen said (he’s very typically Finnish and understated). “But we like to see ourselves as an antidote to Amazon.”

Brick-and-mortar stores are an obvious target for a company like Relex, given that shelf space and real estate are costs that these kinds of retailers have to grapple with more than online sellers do. But Kärkkäinen said that e-commerce companies (which operate where Amazon primarily does) have in fact been an equally important target and customer base. “For these, we might be the only solution they have purchased that has not been developed in-house.”

The funding will be used in two ways. First, to give the company’s sales a boost, especially in the U.S., where business is growing the fastest at the moment. And second, to develop more services on its current platform.

For example, the focus up to now has been on demand forecasting, Kärkkäinen said, and how that affects prices and supply, but the company would like to expand its coverage to labor optimization as well; in other words, how best to staff a business according to forecasts and demand.

Of course, while Amazon is the big competitor for all retailers, it is potentially also a partner. The company regularly productizes its own in-house services, and it will be interesting to see whether and how that leads to Amazon emerging as a competitor to Relex down the line.

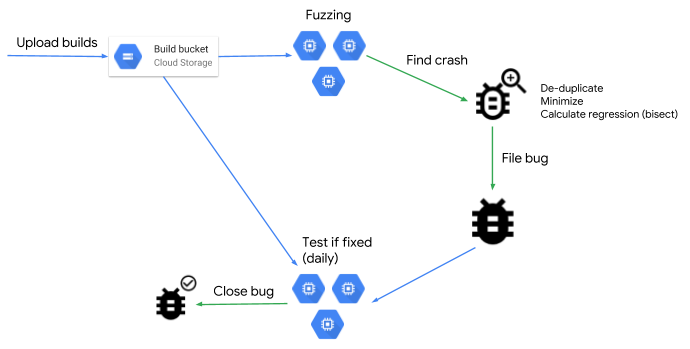

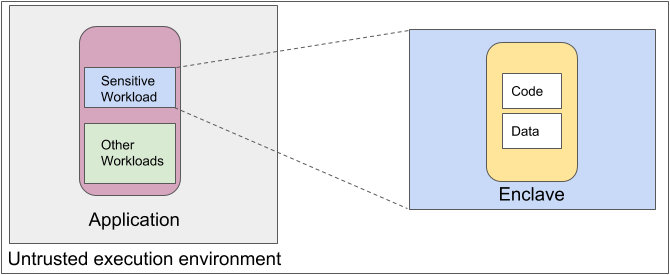

“Together with the industry, we can work toward more transparent and interoperable services to support confidential computing apps, for example, making it easy to understand and verify attestation claims, inter-enclave communication protocols, and federated identity systems across enclaves,” write Garms and Porter.
